Simulating the Flow of Natural Human Conversation

Turn-taking, interruption handling, backchanneling, and more.

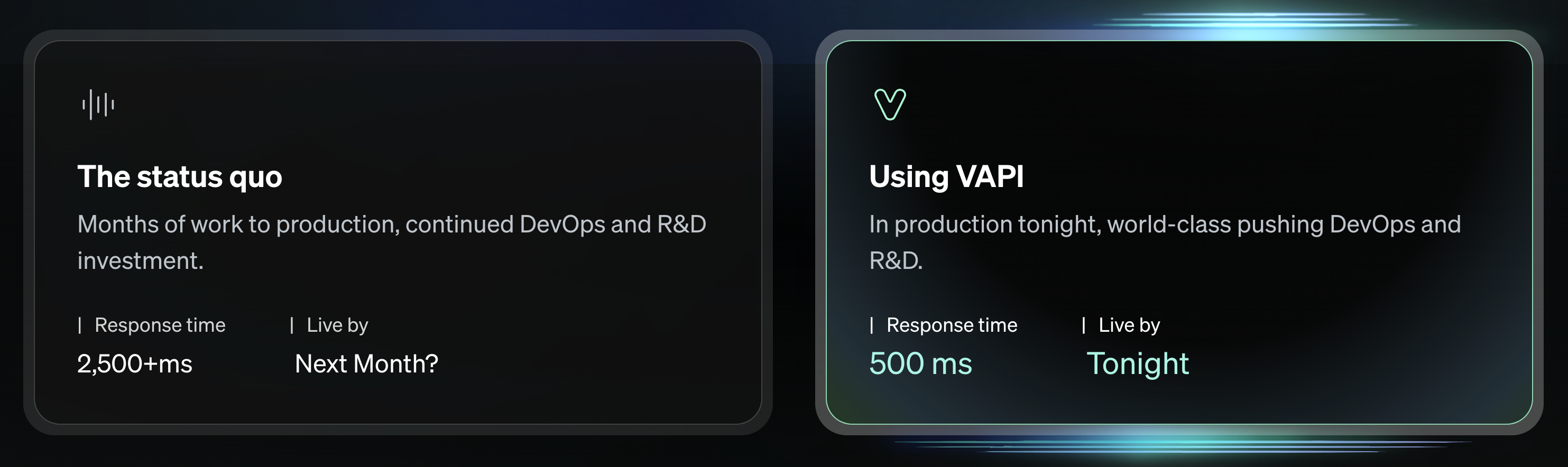

Realtime/Low Latency Demands

Responsive conversation demands low latency, wherever in the world the caller is (<500-800ms voice-to-voice).
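To make the voice-to-voice figure concrete, here is a minimal sketch of a latency budget check. The stage names and timings are made-up placeholders, not measurements of Vapi or any provider, and summing stages sequentially is a simplification (real pipelines stream and overlap work).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageTiming:
    """One step of a hypothetical voice pipeline and the delay it adds."""
    name: str
    millis: int


def voice_to_voice_ms(stages):
    """Delay between the end of user speech and the start of reply audio."""
    return sum(stage.millis for stage in stages)


def within_budget(stages, budget_ms=800):
    """True when the summed stage delays fit inside the latency budget."""
    return voice_to_voice_ms(stages) <= budget_ms


# Placeholder numbers for illustration only.
pipeline = [
    StageTiming("speech-to-text", 150),
    StageTiming("llm first token", 300),
    StageTiming("text-to-speech first audio", 150),
    StageTiming("network round trips", 100),
]

print(voice_to_voice_ms(pipeline), within_budget(pipeline))
```

A budget like this shows how little room each stage has: tightening the target from 800ms to 500ms means the same placeholder pipeline no longer fits.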

Taking Actions (Function Calling)

Take actions during a conversation and send data to your own services for custom actions.
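As a rough illustration of the server side of function calling, the sketch below routes an incoming function-call message to a local handler. The message shape (`name` plus `arguments`), the `schedule_appointment` handler, and the `HANDLERS` registry are all hypothetical; they are not taken from Vapi's API.

```python
import json


def schedule_appointment(name: str, time: str) -> dict:
    """Hypothetical custom action; a real one would call your own service."""
    return {"status": "booked", "name": name, "time": time}


# Hypothetical registry mapping function names to local handlers.
HANDLERS = {"schedule_appointment": schedule_appointment}


def handle_function_call(raw_message: str) -> dict:
    """Dispatch one JSON function-call message to the matching handler."""
    message = json.loads(raw_message)
    function_name = message.get("name")
    handler = HANDLERS.get(function_name)
    if handler is None:
        # Unknown functions are reported back instead of raising.
        return {"error": f"unknown function: {function_name}"}
    return handler(**message.get("arguments", {}))


example = json.dumps(
    {"name": "schedule_appointment",
     "arguments": {"name": "Ada", "time": "2024-01-01T10:00"}}
)
print(handle_function_call(example))
```

Keeping the handlers in a plain dictionary means adding a new action is a one-line change, and unknown function names fail softly.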

Extracting Conversation Data

Review conversation audio, transcripts, & metadata.

Implemented from scratch, this functionality can take months to build and requires large, continuous resources to maintain & improve.

Quickstart Guides

Get up & running in minutes with one of our quickstart guides:

Common Workflows

Explore end-to-end examples for some common voice workflows:

Outbound Sales

We’ll build an outbound sales agent that can schedule appointments.

Inbound Support

We’ll build an inbound support agent.

Key Concepts

Gain a deep understanding of key concepts in Vapi, as well as how Vapi works:

Explore Our SDKs

Our SDKs are open source and available on our GitHub:

Vapi Web

Add a Vapi assistant to your web application.

Vapi iOS

Add a Vapi assistant to your iOS app.

Vapi Flutter

Add a Vapi assistant to your Flutter app.

Vapi React Native

Add a Vapi assistant to your React Native app.

Vapi Python

Multi-platform. Mac, Windows, and Linux.

FAQ

Common questions asked by other users:

Is Vapi right for my use case?

If you are a developer building a voice AI application that simulates human conversation with LLMs, at whatever degree of application complexity, Vapi is built for you.

Whether you are building for a completely “turn-based” use case (like appointment setting) or a robust agentic voice application (like a virtual assistant), Vapi is tooled to solve for your voice AI workflow.

Vapi runs on any platform: the web, mobile, or even embedded systems (given network access).

Sounds good, but I’m building a custom X for Y...

Not a problem, we can likely already support it. Vapi is designed to be modular at every level of the voice pipeline: Text-to-speech, LLM, Speech-to-text.

You can bring your own custom models for any part of the pipeline:

- If they’re hosted with one of our providers: you just need to add your provider keys, then specify the custom model in your API requests.

- If they are hosted elsewhere: you can use the Custom LLM provider and specify the URL to your model in your API request.
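The two hosting cases above can be sketched as a small helper that builds the model portion of a request. The field names (`provider`, `model`, `url`) and the `"custom-llm"` identifier are assumptions made for illustration; check the API reference for the real request schema.

```python
from typing import Optional

# Assumed identifier for the Custom LLM provider (not confirmed by this page).
CUSTOM_PROVIDER = "custom-llm"


def build_model_config(provider: str, model: str, url: Optional[str] = None) -> dict:
    """Build a hypothetical model config for either hosting case."""
    config = {"provider": provider, "model": model}
    if provider == CUSTOM_PROVIDER:
        # A model hosted elsewhere has to be reachable at a URL you supply.
        if not url:
            raise ValueError("a self-hosted model needs a URL")
        config["url"] = url
    return config


# Model hosted with a supported provider: only the model name is needed.
print(build_model_config("openai", "my-fine-tuned-model"))

# Model hosted elsewhere: point the custom provider at your own endpoint.
print(build_model_config(CUSTOM_PROVIDER, "my-model", url="https://example.com/v1"))
```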

Couldn’t I build this myself and save money?

You could (and the person writing this right now did, from scratch), but there are good reasons for not doing so.

Writing a great realtime voice AI application from scratch is a fairly challenging task (more on those challenges here). Most of these challenges are not apparent until you face them; then you realize you are 3 weeks into a rabbit hole that may take months to climb out of.

Think of Vapi as hiring a software engineering team for this hard problem, while you focus on what uniquely generates value for your voice AI application.

But to address cost: the vast majority of the cost of running your application will come from provider costs (Speech-to-text, LLM, Text-to-speech) paid directly to vendors (Deepgram, OpenAI, ElevenLabs, etc.), where we add no fee (vendor cost passes through). These costs would have to be incurred anyway.

Vapi only charges its small fee on top of these for the continuous maintenance & improvement of the hardest components of your system (which would otherwise have cost you time to write and maintain).

No matter what, some cost is inescapable (in money, time, etc.) to solve this challenging technical problem.

Our focus is solely on foundational Voice AI orchestration, & it’s what we put our full time and resources into.

To learn more about Vapi’s pricing, you can visit our pricing page.
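The pass-through pricing model described above amounts to simple addition, sketched here. Every rate in the example is a made-up placeholder, not a real price from Vapi or any vendor; see the pricing page for actual figures.

```python
def cost_per_minute(provider_rates: dict, platform_fee: float) -> dict:
    """Split a per-minute cost into passed-through provider cost and platform fee."""
    provider_total = sum(provider_rates.values())
    return {
        "providers": provider_total,   # billed at vendor cost, no markup
        "platform": platform_fee,      # the only amount added on top
        "total": provider_total + platform_fee,
    }


# Placeholder per-minute rates in USD, for illustration only.
example_rates = {
    "speech_to_text": 0.01,
    "llm": 0.02,
    "text_to_speech": 0.03,
}
breakdown = cost_per_minute(example_rates, platform_fee=0.01)
print(breakdown)
```

The provider portion would be paid to vendors whether or not an orchestration layer sits in between, which is the point the answer above makes.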

Is it going to be hard to set up?

No — in fact, the setup could not be easier:

- Web Dashboard: It can take minutes to get up & running with our dashboard.

- Client SDKs: You can start calls with 1 line of code with any of our client SDKs.

How is Vapi different from other Voice AI services?

Vapi focuses on developers, giving them modular, simple, & robust tooling to build any voice AI application imaginable.

Vapi also has some of the lowest latency & (equally important) highest reliability of any voice AI platform built for developers.